How Hyperscale Data Centers are reshaping India’s IT

In today’s times, a common question arises while discussing technology: what is the difference between Data Centers and Hyperscale Data Centers?

The answer: Data Centers are like hotels, where the space is shared among multiple guests, whereas in the case of Hyperscale Data Centers, the entire building or campus is dedicated to a single customer.

Companies like Amazon, Google, Microsoft, Facebook, and OTTs, which have millions and millions of end-users, have infused their services into our day-to-day life to cater to our personal and professional needs. Data centers are the backbone of this digital world.

This is where Hyperscale Data Centers come into play and provide seamless experiences to such a massive number of end-users.

The term Hyperscale means the ability of an infrastructure to scale up when the demand for the service increases. The infrastructure comprises computers, storage, memory, networks, etc. Maintaining such infrastructure is not an easy task. The machines, the server hall temperature and humidity, and other critical parameters are monitored 24×7 by the Building Management System (BMS).
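To make the idea of 24×7 parameter monitoring concrete, here is a minimal Python sketch of the kind of threshold check a monitoring system might run on incoming sensor readings. The limits, sensor names and the `find_alerts` helper are all assumptions made up for illustration; they do not describe any particular BMS product.

```python
from dataclasses import dataclass

# Hypothetical acceptable ranges for a server hall; real limits come from
# the facility's own design and equipment specifications.
LIMITS = {
    "temperature_c": (18.0, 27.0),
    "humidity_pct": (20.0, 80.0),
}


@dataclass
class Reading:
    sensor: str      # e.g. "hall-1/rack-12" (made-up naming scheme)
    parameter: str   # key into LIMITS
    value: float


def find_alerts(readings):
    """Return the readings whose value falls outside the allowed range."""
    alerts = []
    for r in readings:
        low, high = LIMITS[r.parameter]
        if not (low <= r.value <= high):
            alerts.append(r)
    return alerts


readings = [
    Reading("hall-1/rack-12", "temperature_c", 24.5),
    Reading("hall-1/rack-40", "temperature_c", 31.2),
    Reading("hall-1/rack-12", "humidity_pct", 45.0),
]
for alert in find_alerts(readings):
    print(f"ALERT {alert.sensor}: {alert.parameter}={alert.value}")
```

In practice such a check would run continuously against live sensor feeds and raise alarms to the operations team rather than print to a console.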

Data Centers are important because everyone uses data. It is safe to say that perhaps everyone, from individual users like you and me to multinationals, has used the services offered by data centers at some point in their lives. Whether you’re sending emails, shopping online, playing video games, or casually browsing social media, every byte of your online data is stored in a data center. As remote work quickly becomes the new standard, the need for data centers is even greater. The cloud data center is rapidly becoming the preferred mode of data storage for medium and large enterprises, because it is considered much more secure than using traditional hardware devices to store information. Cloud data centers provide a high degree of security protection, such as firewalls and back-up components, in the event of a security breach. The COVID-19 pandemic paved the way for the work-from-home culture, and global internet traffic increased by 40% in 2020.

Also, the rise of new technologies like the Internet of Things (IoT), Artificial Intelligence (AI), Machine Learning (ML), 5G, Augmented Reality (AR), Virtual Reality (VR) and Blockchain caused an explosion of data generation and an increased demand for storage capacities.

Cloud infrastructure has helped businesses and governments with solutions to respond to the pandemic. As a result, the demand for cloud data center facilities has increased, and meeting it requires heavy infrastructure with a lot of power.

Data Centers have quite a negative impact on the environment because of their large power consumption, and they contribute about 2% of global greenhouse gas emissions. To reduce this carbon footprint and work towards a sustainable environment, many data center providers globally have started using power from renewable energy sources like solar and wind through Power Purchase Agreements (PPA). Data Center power consumption can also be lowered by regularly updating systems with new technologies and maintaining the existing infrastructure.

The Indian market will see multifold growth in the Data Center industry due to the ease of doing business in the country. Thanks to the attractive subsidies provided by the state governments, huge investments have been committed for the next four years.

Interesting facts about Data Centers:

- A large Data Center uses as much electricity as a small Indian town.

- The largest data center in the world, located in China, covers 10.7 million sq. ft., approximately 1.5 times the size of the Pentagon building in the USA.

- Data Centers will consume nearly 2% of the world’s electricity by 2030. Hence, Green Data Center initiatives are being taken up by various organizations.

The future of training is ‘virtual’

What sounds like the cutting edge of science fiction is no fantasy; it is happening right now as you read this article

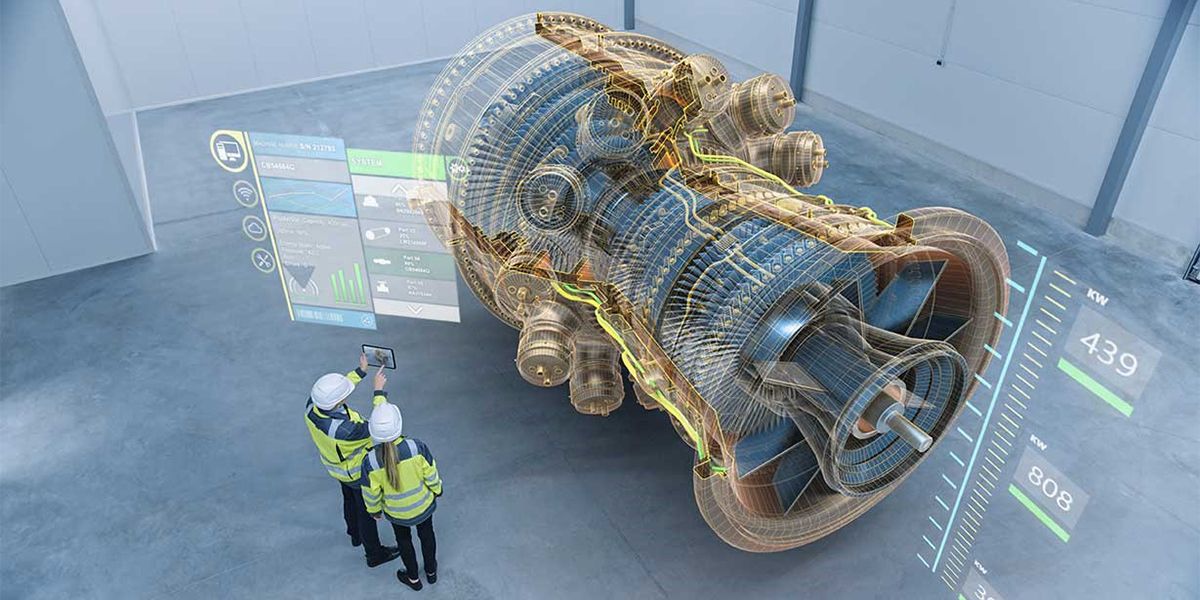

Imagine getting trained in a piece of equipment that is part of a critical production pipeline. What if you can get trained while you are in your living room? Sounds fantastic, eh. Well, I am not talking about e-learning or video-based training. Rather what if the machine is virtually in your living room while you walk around it and get familiar with its features? What if you can interact with it and operate it while being immersed in a virtual replica of the entire production facility? Yes, what sounds like the cutting edge of science fiction is no fantasy; it is happening right now as you read this article.

Ever heard of the terms ‘Augmented Reality’ or ‘Virtual Reality’? Welcome to the world of ‘Extended Reality’. What may seem like science fiction is in reality a science fact. Here we will try to explain how these technologies help in transforming the learning experience for you.

Let’s get to the aforementioned example. A major pharmaceutical company wanted to train some of its employees on a machine. Simple, isn’t it? But here’s the catch. That machine was a one-of-its-kind, custom-built unit located at a faraway facility, and the logistics involved were difficult. What if the operators could be trained remotely? That is when Sify proposed an Augmented Reality (AR) solution. The operators could learn all about the machine, including how to operate it, wherever they were. All they needed was an iPad, which was a standard device in the company. The machine is simply overlaid on their real-world environment, and the user can walk around it as if it were present in the room. They could virtually operate the machine and even make mistakes without affecting anything in the real world.

What is the point of learning if the company cannot measure the outcome? With this technology, several metrics can be tracked and analysed to provide feedback at the end of the training. So, what was the outcome of the training at the pharma company? With the previous hands-on method, new operators took close to one year to come up to the speed of experienced operators. Even then, new operators took 12 minutes to perform the task that experienced operators did in 5 minutes, a staggering gap of 7 minutes. With the augmented reality training protocols, all they needed was one afternoon, and new operators came up to the speed of experienced operators in no time. This not only means that more products can reach deserving patients but also significantly reduces expenditure for the company. And for the user, all they need is a smartphone or a tablet that they already have. This is an amazingly effective training solution. Users can also be trained to dismantle and reassemble complex machines without risking their physical safety.

Not only corporates but schools too can utilise this technology for effective teaching. Imagine a student pointing her tablet at a textbook and, voila, the book comes alive with 3D models of a volcano erupting, or history becomes interesting through visual storytelling.

Now imagine another scenario. A company needs its employees to work at heights of over 100 feet, such as on a tower in an oil rig or on a high-tension electricity transmission tower. After months of training, when employees go to the actual work site, some of them realize that they cannot work at that height.

They suffer from acrophobia or a fear of heights. They would not know of this unless they really climb to that height. What if the company could test in advance if the person can work in such a setting?

Enter Virtual Reality (VR). Using a virtual reality headset that the user can strap on to their head, they are immersed in a realistic environment. They look around and all they see is an abyss. They are instructed to perform some of the tasks that they will be doing at the work site. This is a safe way to gauge if the user suffers from acrophobia. Since VR is totally immersive, users will forget that they are safely standing on the floor and might get nervous or fail to do the tasks. This enables the company to identify people who fear heights earlier and assign them to a different task.

Any risky work environment can be virtually re-created for the training. This helps the employees get trained without any harm and it gives them confidence when they go to the actual work location.

VR requires a special headset and controllers for the user to experience it. A lot of different headsets with varying capabilities are already available for the common user. Some of these are not expensive either.

A multitude of metrics can be tracked and stored on xAPI-based learning management systems (LMS). Analytics data can be used by the admin or the supervisor to gauge how the employee has fared in the training. That helps them determine the learning outcome and the ROI (return on investment) of the training.
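For readers curious what such a tracked metric looks like, xAPI records each learning event as a "statement" with an actor, a verb and an object, optionally with a result. The Python sketch below builds one such statement. The learner, the activity ID and the `build_statement` helper are hypothetical; only the overall statement shape and the `completed` verb ID come from the xAPI vocabulary.

```python
import json


def build_statement(learner_email, learner_name, activity_id, activity_name,
                    seconds_taken, success):
    """Build a minimal xAPI statement: actor, verb, object, result."""
    return {
        "actor": {"mbox": f"mailto:{learner_email}", "name": learner_name},
        "verb": {
            "id": "http://adlnet.gov/expapi/verbs/completed",
            "display": {"en-US": "completed"},
        },
        "object": {
            "id": activity_id,
            "definition": {"name": {"en-US": activity_name}},
        },
        "result": {
            "success": success,
            # xAPI durations use the ISO 8601 duration format, e.g. PT300S
            "duration": f"PT{seconds_taken}S",
        },
    }


statement = build_statement(
    "operator@example.com", "New Operator",
    "http://example.com/activities/machine-operation",  # hypothetical activity ID
    "Machine operation (AR)", 300, True,
)
print(json.dumps(statement, indent=2))
```

A training app would send statements like this to the learning record store behind the LMS, where a supervisor can compare, say, task durations across learners.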

Training is changing fast and becoming more effective with these new-age technologies. A lot of collaborative learning can happen in the virtual reality space, where multiple users can log on to the same training at the same time to learn a task. These immersive methods help learners retain more of what they learnt compared to other methods of training.

Well, the future is already here!

How OTT platforms provide seamless content – A Data Center Walkthrough

With the number of options and choices available, it almost seems like there’s no end to what you can and can’t watch on these platforms. It shouldn’t be difficult for a company like Netflix to store such a huge library of shows and movies at HD quality. But the question remains as to how they provide this content to so many people, at the same time, at such a large scale?

The India CTV Report 2021 says around 70% of users in the country spend up to four hours watching OTT content. As India is fast gearing up to be one of the largest consumers of OTT content, players like Netflix, Prime Video, Zee5 et al. are competing to provide relevant and user-centric content, using Machine Learning algorithms to suggest what you may like to watch.

Here, we attempt to provide an insight into the architecture that goes behind providing such a smooth experience of watching your favourite movie on your phone, tablet, laptop, etc.

Until not too long ago, buffering YouTube videos was a common household problem. Now, bingeing on Netflix shows has become a common household habit. Data-heavy, media-rich content can now be streamed at high speed and high quality around the world, so buffering is largely forgotten, let alone downtime due to server crashes (ask an IRCTC ticket booker). Let’s see how this has become possible:

Initially, to access a website, the data from the origin server (which may be in another country) needed to flow across the internet through an incredibly long path to reach your device, where you can see the website and its content. Due to the extremely long distance and the origin server having to cater to several requests for its content, it would be near impossible to provide a content streaming service for consumers around the world from a single server farm location. Server farms are also not easy to maintain, given the enormous power and cooling requirements for processing and storing vast amounts of data.

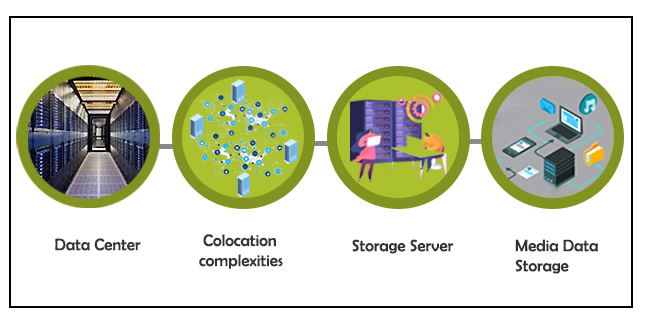

This is where Data Centers around the world have helped OTT players like Netflix provide seamless content to users everywhere. Data Centers are secure spaces with controlled environments that host the servers used to store and deliver content to users in and around that region. Rather than going to other countries and building and running their own facilities, these media players rent that space through colocation services and avoid the complexities involved.

How Edge Data Centers act as a catalyst

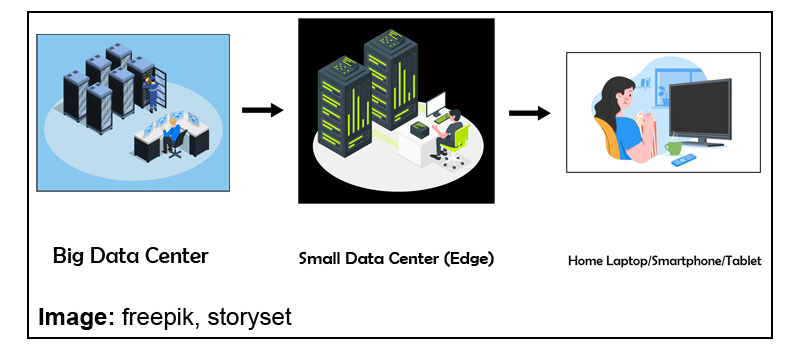

Hosting multiple servers in Data Centers can sometimes be highly expensive and resource-consuming due to multiple server setups across locations. Moreover, delivering HD-quality film content requires a lot of processing and storage. One solution to this problem is Edge Data Centers, which are essentially smaller data centers (an edge data center could even be just a regional point of presence [POP] in a network hub maintained by network/internet service providers).

As long as there is a POP that provides smaller storage and compute capacity and is interconnected to the data center, the edge data center can cache (copy) the content at its location, which is closer to the end consumer than a normal Data Center. This results in lower latency (the time taken to deliver data) and makes the streaming experience fast and effortless.
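The caching idea can be illustrated with a small Python sketch. It models an edge location as a least-recently-used (LRU) cache that serves popular titles locally and only goes back to the origin on a miss. The `EdgeCache` class, the `origin` function and the capacity are assumptions for illustration; real CDNs use far more elaborate placement and eviction policies.

```python
from collections import OrderedDict


class EdgeCache:
    """Tiny LRU cache standing in for an edge location's content store."""

    def __init__(self, capacity, fetch_from_origin):
        self.capacity = capacity
        self.fetch_from_origin = fetch_from_origin  # called on a cache miss
        self.store = OrderedDict()
        self.hits = 0
        self.misses = 0

    def get(self, key):
        if key in self.store:
            self.hits += 1
            self.store.move_to_end(key)         # mark as most recently used
            return self.store[key]
        self.misses += 1
        content = self.fetch_from_origin(key)   # slow, long-distance path
        self.store[key] = content
        if len(self.store) > self.capacity:
            self.store.popitem(last=False)      # evict least recently used
        return content


def origin(key):
    # Hypothetical origin server lookup
    return f"video-bytes-for-{key}"


cache = EdgeCache(capacity=2, fetch_from_origin=origin)
for title in ["ep1", "ep1", "ep2", "ep1", "ep3", "ep2"]:
    cache.get(title)
print(cache.hits, cache.misses)  # prints: 2 4
```

Every hit is a request that never had to travel to the distant origin server, which is where the latency saving comes from.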

Role of Content Delivery Networks (CDN)

The edge data center therefore acts as a catalyst to content delivery networks to support streaming without buffering. Content Delivery Networks (CDNs) are specialized networks that support high bandwidth requirements for high-speed data-transfer and processing. Edge Data Centers are an important element of CDNs to ensure you can binge on your favorite OTT series at high speed and high quality.

Although many OTT players like Sony/Zee opt for a captive Data Center approach due to security reasons, a better alternative would be to colocate (outsource) servers with a service provider and even opt for a cloud service that is agile and scalable for sudden storage and compute requirements. Another reason for colocating with service providers is the interconnected Data Center network they bring with them. This makes it easier to reach other Edge locations and Data Centers and leverage an existing network without incurring the cost of building a dedicated one.

Demand for OTT services has seen a steady rise and the pandemic, in a way, acted as a catalyst in this drive.

However, OTT platform business models must be mindful of the pitfalls.

Target audience has to be top of the list to build a loyal user base. New content and better UX (User Experience) could keep subscribers, who usually opt out after the free trial, interested.

The infrastructure and development of integral elements of Edge Data Centers are certain to take centre stage in enabling content to flow more seamlessly in the future, which would open the job market to more technical resources, engineers and other professionals.

SAP Security – A Holistic View

With 90%+ of Fortune-500 organizations running SAP to manage their mission-critical business processes and considering the much-enhanced risk of cyber-security breach in today’s volatile and tech-savvy geo-socio-political world, security of your SAP systems deserves much more serious consideration than ever before.

Incidents such as hacked websites, successful Denial-of-Service attacks, and stolen user data like passwords, bank account numbers and other sensitive data are on the rise.

Taking a holistic view, this article captures possible ways to plug the gaps in the various layers, from the operating system level to the network level to the application level to the cloud, and everything in between. The related SAP products/solutions and best practices are also addressed in the context of security.

1. Protect your IT environment

Internet Transaction Server (ITS) Security

To make SAP system applications available for safe access from a web browser, a middleware component called Internet Transaction Server (ITS) is used. The ITS architecture has many built-in security features.

Network Basics (SAP Router, Firewalls and Network Ports)

The basic security tools that SAP uses are firewalls, network ports and SAP Router. SAP Web Dispatcher and SAP Router are examples of application-level gateways that can be used for filtering SAP network traffic.

Web-AS (Application Server) Security

SSL (Secure Sockets Layer) is a standard security technology for establishing an encrypted link between a server and a client. SSL authenticates the communication partners (server and client) and negotiates the encryption parameters.
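As a general illustration of what "authenticating the communication partner" means on the client side, here is a minimal sketch using Python's standard `ssl` module. It is not SAP-specific configuration. Note that modern stacks use TLS, the successor to SSL, and the choice of TLS 1.2 as the minimum version here is an assumption, not a recommendation taken from SAP documentation.

```python
import ssl


def make_client_context():
    """Create a client-side TLS context with certificate checks enabled."""
    context = ssl.create_default_context()
    # Assumption: refuse protocol versions older than TLS 1.2
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context


context = make_client_context()
print(context.verify_mode == ssl.CERT_REQUIRED)  # the server certificate is verified
print(context.check_hostname)                    # the hostname must match the certificate
```

A client would then wrap its socket with `context.wrap_socket(sock, server_hostname=host)`, and the handshake fails if the server cannot present a valid certificate for that host.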

2. Operating System Security hardening for HANA

SAP pays close attention to security. The security of the underlying operating system is at least as important as the security of the HANA database. Many attacks target the operating system rather than the database directly. Once an attacker has gained access and sufficient privileges, they can go on to attack the running database application.

Customized operating system security hardening for HANA includes:

- Security hardening settings for HANA

- SUSE/RHEL firewall for HANA

- Minimal OS package selection (the fewer OS packages a HANA system has installed, the fewer potential security holes it might have)

For any server hardening, the following procedure is used:

- Benchmark templates used for hardening

- Hardening parameters considered

- Steps followed for hardening

- Post-hardening test by DB/application team

The above procedures should help SAP customers in securing their servers (mostly on HP-UX, SUSE Linux, RHEL or Wintel) from threats, known/unknown attacks and vulnerabilities. They also add one more layer of security at the host level.
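The step of checking hardening parameters against a benchmark template can be sketched in a few lines of Python. The baseline values below are illustrative assumptions, not an official SAP, SUSE or Red Hat template, and the sample text only mimics the `key = value` format printed by `sysctl -a` on Linux.

```python
# Hypothetical benchmark template: kernel parameter -> required value.
BASELINE = {
    "net.ipv4.ip_forward": "0",
    "net.ipv4.conf.all.accept_redirects": "0",
    "kernel.randomize_va_space": "2",
}


def parse_settings(text):
    """Parse 'key = value' lines (the format printed by `sysctl -a`)."""
    settings = {}
    for line in text.splitlines():
        if "=" in line:
            key, value = line.split("=", 1)
            settings[key.strip()] = value.strip()
    return settings


def find_deviations(actual, baseline=BASELINE):
    """Return {parameter: (expected, found)} for every mismatch."""
    return {
        key: (expected, actual.get(key))
        for key, expected in baseline.items()
        if actual.get(key) != expected
    }


sample_output = """\
net.ipv4.ip_forward = 1
net.ipv4.conf.all.accept_redirects = 0
kernel.randomize_va_space = 2
"""
deviations = find_deviations(parse_settings(sample_output))
print(deviations)  # {'net.ipv4.ip_forward': ('0', '1')}
```

Running the same comparison before and after hardening gives the DB/application team a simple record of what changed ahead of their post-hardening tests.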

3. SAP Application (Transaction-level security)

SAP Security has always been a fine balancing act between protecting SAP data and applications from unauthorized use and access and, at the same time, allowing users to do the transactions they’re supposed to. A lot of thinking needs to go into designing the SAP authorization matrix, taking into account the principle of segregation of duties (SoD).
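A segregation-of-duties check boils down to looking for users who hold two activities that should never sit with the same person. The Python sketch below shows the idea on a toy authorization matrix. The activity names, conflict rules and users are invented for illustration and do not correspond to real SAP transaction codes or to the SAP GRC rule set.

```python
from itertools import combinations

# Hypothetical conflict rules: pairs of activities one person should not hold together.
CONFLICTS = {
    frozenset({"create_vendor", "approve_payment"}),
    frozenset({"create_purchase_order", "approve_purchase_order"}),
}


def sod_violations(assignments):
    """Map each user to the conflicting activity pairs they hold."""
    violations = {}
    for user, activities in assignments.items():
        found = [
            pair
            for pair in combinations(sorted(activities), 2)
            if frozenset(pair) in CONFLICTS
        ]
        if found:
            violations[user] = found
    return violations


assignments = {
    "asha": {"create_vendor", "approve_payment", "display_invoice"},
    "ravi": {"create_purchase_order", "display_invoice"},
}
print(sod_violations(assignments))
# {'asha': [('approve_payment', 'create_vendor')]}
```

Here "asha" could both create a vendor and approve a payment to it, which is exactly the kind of combination an authorization design tries to rule out.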

The Business Transaction Analysis (transaction code STAD) delivers workload statistics across business transactions and jobs. Here, a business transaction is a user’s transaction that starts when a transaction is called (/n…) and ends with an update call or when the user leaves the transaction. STAD data can be used to monitor, analyse and audit transaction usage and to maintain security against unauthorized transaction access.

4. SAP GRC

SAP GRC (Governance, Risk & Compliance), a key offering from SAP, has the following sub-modules:

Access control

The SAP GRC Access Control application reduces access risk across the enterprise by helping prevent unauthorized access across SAP applications and providing real-time visibility into access risk.

Process control –

SAP GRC Process Control is an application used to meet production business process and information technology (IT) control monitoring requirements, as well as to serve as an integrated, end-to-end internal control compliance management solution.

Risk Management

- Enterprise-wide risk management framework

- Key risk indicators and automated risk alerts from business applications

5. SAP Audit –

AIS (Audit Information System) –

AIS or Audit Information System is an in-built auditing tool in SAP that you can use to analyse security aspects of your SAP system in detail. AIS is designed for business audits and systems audits. It presents its information in the Audit Info Structure.

Besides this, there can be a license audit by SAP and/or by your company’s auditing firm (like Deloitte/PwC).

Basic Audit

Here the SAP auditors collaborate strongly with a given license compliance manager who is responsible for ensuring that the audit activities correspond with SAP’s procedure and directives. The number of basic audits undertaken is subject to SAP’s yearly planning, and it is worth noting that not all customers are audited annually.

The auditors perform the tasks below (though they will vary a bit from organization to organization and from auditor to auditor):

- Analysis of the system landscape to make sure that all relevant systems (production and development) are measured.

- Technical verification of the USMM log files: correctness of the client, price list selection, user types, dialog users vs. technical users, background jobs, installed components, etc.

- Technical verification of the LAW: users’ combination and their count, etc.

- Analysis of engine measurement – verification of the SAP Notes

- Additional verification of expired users, multiple logons, late logons, workbench development activities, etc.

- Verification of Self Declaration Products, HANA measurement and BusinessObjects.

SAP Enhanced Audit –

Enhanced audit is performed remotely and/or onsite and is addressed to selected customers. Besides the tasks undertaken in ‘Basic Audit’, it additionally covers –

- Checking interactions between SAP and non-SAP systems

- Data flow direction

- Details of how data is transferred between systems/users (EDI, IDoc, etc.)

6. Security in SAP S/4 HANA and SAP BW/4 HANA

SAP S/4 HANA & SAP BW/4 HANA use the same security model as traditional ABAP applications. All the earlier explained components/security solutions are fully applicable in S/4 HANA as well as BW/4 HANA.

But there are increased security challenges posed by its component, SAP Fiori, which brings in mobility. Increased mobility means that data can be transferred over a 4G signal, which is not as secure and is easier to hack into. If a device falls into the wrong hands, due to theft or loss, that person could then gain unlawful access to your system. The remediation is elaborated next.

7. Security in Fiori

When an SAP Fiori app is launched, the request is sent from the client to the ABAP front-end server by the SAP Fiori Launchpad via the Web Dispatcher. The ABAP front-end server authenticates the user when this request is sent, using the authentication and single sign-on (SSO) mechanisms provided by SAP NetWeaver.

Securing the SAP Fiori system ensures that the information and processes that support your business needs are protected from unauthorized access to critical information.

The biggest threat for an SAP app is the risk of an employee losing important customer data. The good thing about mobile SAP is that most mobile devices are enabled with remote wipe capabilities. And many of the CRM-related functions that organizations are looking to use on mobile phones are cloud-based, which means the confidential data does not reside on the device itself.

SAP Afaria, one of the most popular mobile SAP security providers, is used by many large organizations to enhance the security in Fiori. It helps to connect mobile devices such as smartphones and tablet computers. Afaria can automate electronic file distribution, file and directory management, notifications, and system registry management tasks. Critical security tasks include the regular backing up of data, installing patches and security updates, enforcing security policies and monitoring security violations or threats.

8. SAP Analytics Cloud (SAC)

SAP Analytics Cloud (or SAP Cloud for Analytics) is a software as a service (SaaS) business intelligence (BI) platform designed by SAP. Analytics Cloud is made specifically with the intent of providing all analytics capabilities to all users in one product.

Built natively on SAP HANA Cloud Platform (HCP), SAP Analytics Cloud allows data analysts and business decision makers to visualize, plan and make predictions all from one secure, cloud-based environment. With all the data sources and analytics functions in one product, Analytics Cloud users can work more efficiently. It is seamlessly integrated with Microsoft Office.

SAP Analytics Cloud uses the same security model as traditional ABAP applications.

Roles, users, teams, permissions and auditing activities are available to manage security.

9. Identity Management

SAP Identity Management is part of a comprehensive SAP security suite and covers the entire identity lifecycle and automation capabilities based on business processes.

It takes a holistic approach towards managing identities and permissions. It ensures that the right users have the right access to the right systems at the right time. It thereby enables the efficient, secure and compliant execution of business processes.

10. IAG – (Identity Access Governance) for Cloud Security

SAP Identity Access Governance (IAG) is a multi-tenant solution built on top of SAP Business Technology Platform (BTP) and SAP’s proprietary HANA database. It is SAP’s latest innovation for Access Governance for Cloud.

It provides out-of-the-box integration with SAP’s latest cloud applications such as SAP Ariba, SAP SuccessFactors, SAP S/4HANA Cloud, SAP Analytics Cloud and other cloud solutions, with many more SAP and non-SAP integrations on the roadmap.

11. SAP Data Custodian

To allay the fears of data security in SAP systems hosted on Public Cloud, SAP introduced its latest solution called ‘SAP Data Custodian’. It is an innovative governance, risk and compliance SaaS solution which can give your organization visibility and control of your data in the public cloud similar to what was previously available only on-premise or in a private cloud.

- Allows you to localize your data and to restrict access to your cloud resources and SAP applications based on user context, including geo-location and citizenship

- Restricts access to your data, including access by employees of the cloud infrastructure provider

- Puts encryption key management control in your hands and provides an additional layer of data protection by segregating your keys from your business data

- Uses tokenization to secure sensitive database fields by replacing sensitive data with format-preserving randomly generated strings of characters or symbols, known as tokens

- With data discovery you can scan for sensitive data categories such as SSN / SIN, national ID number, passport number, IBAN, credit card, email address, ethnicity, et cetera, based on pattern determination and machine learning
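To show what format-preserving tokenization means in principle, here is a small Python sketch. It replaces each digit with a random digit and each letter with a random letter, keeps separators in place, and stores the mapping in a lookup table (a "vault"). This is a generic illustration under those assumptions, with a made-up `TokenVault` class; it is not how SAP Data Custodian implements the feature.

```python
import secrets
import string


class TokenVault:
    """Swap sensitive values for random tokens of the same shape."""

    def __init__(self):
        self._token_to_value = {}
        self._value_to_token = {}

    def _random_like(self, value):
        # Digits become random digits, letters random letters; separators stay.
        out = []
        for ch in value:
            if ch.isdigit():
                out.append(secrets.choice(string.digits))
            elif ch.isalpha():
                out.append(secrets.choice(string.ascii_uppercase))
            else:
                out.append(ch)
        return "".join(out)

    def tokenize(self, value):
        if not any(ch.isdigit() or ch.isalpha() for ch in value):
            raise ValueError("value has no characters that can be replaced")
        if value in self._value_to_token:
            return self._value_to_token[value]
        token = self._random_like(value)
        # Retry on the rare collision with an existing token or the value itself
        while token in self._token_to_value or token == value:
            token = self._random_like(value)
        self._token_to_value[token] = value
        self._value_to_token[value] = token
        return token

    def detokenize(self, token):
        return self._token_to_value[token]


vault = TokenVault()
card = "4111-1111-1111-1111"
token = vault.tokenize(card)
print(token)                    # random digits in the same 4-4-4-4 layout
print(vault.detokenize(token))  # the original value, for authorized lookups only
```

Because the token keeps the original layout, downstream systems that expect a 16-digit card format keep working, while the real value lives only in the protected vault.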

12. Futuristic approach towards securing ERP systems

Driven by the digital transformation of businesses and its demand for flexible computing resources, the cloud has become the prevalent deployment model for enterprise services and applications introducing complex stakeholder relations and extended attack surfaces.

Mobility (access from smartphones/tablets) and IoT (Internet of Things) brought in new challenges of scale (“billions of devices”) and the need to cope with the limited computational and storage capabilities of these devices, calling for the design of specific lightweight security protocols. Sensor integration offered new opportunities for application scenarios, for instance, in distributed supply chains.

Increased capabilities of sensors and gateways now allow business logic to be moved to the edge, removing the backend as a performance bottleneck.

SAP has been investing a lot in drawing up and refining its security roadmap for the future.

It used McKinsey’s 7S strategy concept to review SAP Security Research and adapt supporting factors. Secondly, it assessed technology trends provided by Gartner, Forrester, IDC and others to look into probable security challenges.

As per SAP’s research, today’s big challenges in cybersecurity emanate around ML (Machine Learning). The trend is ML anywhere! ML itself provides a new attack vector which needs to be secured. In addition, ML is used by attackers and so needs to be used by us to better defend our solutions.

Machine Learning has the most significant impact on the security and privacy roadmap these days, both providing the power of data to design novel security mechanisms and requiring new security and privacy paradigms to counter Machine Learning-specific threats.

Deceptive applications are another trend SAP foresees. Applications must be enabled to identify attackers and defend themselves.

Thirdly, and still underestimated, SAP foresees attacks via open source or third-party software. SAP has been adapting its strategy accordingly to tackle these new trends.

Wishing all SAP Customers a Happy, Safe and Compliant SAP experience!

SAP Migrations to AWS Cloud using CloudEndure Migration Tool

Enterprises migrating SAP workloads to AWS are looking for a readily available as-is migration solution. Earlier, enterprises used the traditional method of SAP backup and restore for migration, or AWS-native tools such as AWS Server Migration Service, to perform this type of migration. CloudEndure Migration is a new AWS-native migration tool for SAP customers.

Enterprises looking to move a large number of SAP systems onto AWS can use CloudEndure Migration without worrying about compatibility, performance disruption or long cutover windows. You can perform any re-architecture after your systems start running on AWS.

Solution Overview

CloudEndure Migration simplifies, expedites and reduces the cost of such migrations by offering a highly automated as-is migration solution. This blog demonstrates how easy it is to set up CloudEndure Migration and the steps involved in migrating SAP systems from source to AWS environment.

CloudEndure Migration Architecture

The following diagram shows the CloudEndure Migration architecture for migrating SAP systems.

Major steps for this migration are as below:

- Agent Installation

- Continuous Replication

- Testing and Cutover

CloudEndure helps you overcome the following migration challenges effectively:

- Diverse infrastructure and OS type

- Legacy application

- Complex database

- Busy continuously changing workloads

- Machine compatibility issues

- Expensive cloud skills required

- Downtime and performance disruptions

- Tight project timelines and limited budget

Use Cases

The most common use cases for CloudEndure Migration are:

- Lift and Shift, then optimize

- The vast majority of Windows/Linux servers, where the agent can be installed on the source machine

- Replicating Block Storage devices like SAN, iSCSI, Physical, EBS, VMDK, VHD

- Replicating full machine/volume

Benefits

Access to advanced technology

Simplify operations and get better insights with AWS Application Migration Service’s integration with AWS Identity and Access Management (IAM), Amazon CloudWatch, AWS CloudTrail, and other AWS services.

Minimal downtime during migration

With AWS Application Migration Service, you can maintain normal business operations throughout the replication process. It continuously replicates source servers, which means little to no performance impact. Continuous replication also makes it easy to conduct non-disruptive tests and shortens cutover windows.

Reduced costs

AWS Application Migration Service reduces overall migration costs as there is no need to invest in multiple migration solutions, specialized cloud development, or application-specific skills. This is because it can be used to lift and shift any application from any source infrastructure that runs supported operating systems (OS).

Conclusion

This blog discussed how Sify can help its SAP customers migrate to AWS Cloud using the CloudEndure tool. Sify would be glad to help your organization as your AWS Managed Services Partner with a tailor-made solution involving a seamless cloud migration experience, and with your Cloud Infrastructure management thereafter.

CloudEndure Migration software doesn’t charge anything as a license fee to perform automated migration to AWS. Each free CloudEndure Migration license allows for 90 days of use following agent installation. During this period, you can start the replication of your source machines, launch target machines, conduct unlimited tests, and perform a scheduled cutover to complete your migration.

Automated Recovery of SAP HANA Database using AWS native options

This blog covers the options available in AWS to recover an SAP HANA database at low cost, without using SAP's native HSR tool. With a focus on low cost, Sify recommends a cloud-native solution that leverages EC2 Auto Scaling and Amazon EBS snapshots, which is not feasible in an on-premises setup.

Solution Overview

The restore process leveraging Auto Scaling and EBS snapshots works across Availability Zones in a region. Snapshots provide a fast backup process, independent of the database size. They are stored in Amazon S3 and replicated across Availability Zones automatically, meaning we can create a new volume from a snapshot in another Availability Zone. In addition, Amazon EBS snapshots are incremental by default: only the changes made since the last snapshot are stored. To create a resilient, highly available architecture, automation is key. All steps to recover the database must be automated in case something fails or goes wrong.

Autoscaling Architecture

The following diagram shows the Auto Scaling architecture for systems in AWS.

Use Case

EBS Snapshots

Prior to enabling EBS snapshots, we must ensure that log backups are written to an EFS folder that is available across Availability Zones.

Create a script that runs before the EBS snapshot command is executed. Using the system username and password, the script makes an entry in the HANA backup catalog so that the database is aware of the snapshot backup. We use the snapshot feature to take a point-in-time, crash-consistent snapshot across multiple EBS volumes without a manual I/O freeze. It is recommended to take a snapshot every 8 to 12 hours.
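The snapshot step described above could be sketched roughly as follows. This is a minimal sketch, not Sify's actual script: it assumes `ec2` behaves like `boto3.client("ec2")`, and the function name, the `hana-sid` tag, and the `sid` label are hypothetical. The HANA backup catalog entry that the script makes before the snapshot is left out here.

```python
from datetime import datetime, timezone


def snapshot_hana_volumes(ec2, instance_id, sid):
    """Request a crash-consistent snapshot of all data volumes of one instance.

    `ec2` is expected to behave like boto3.client("ec2"). The tag names and
    the `sid` label are illustrative assumptions, not part of the original.
    """
    stamp = datetime.now(timezone.utc).strftime("%Y-%m-%dT%H:%M:%SZ")
    response = ec2.create_snapshots(
        Description=f"HANA {sid} snapshot {stamp}",
        InstanceSpecification={
            "InstanceId": instance_id,
            "ExcludeBootVolume": True,  # the root volume comes from the AMI
        },
        TagSpecifications=[
            {
                "ResourceType": "snapshot",
                "Tags": [
                    {"Key": "hana-sid", "Value": sid},  # hypothetical tag
                    {"Key": "created-at", "Value": stamp},
                ],
            }
        ],
        CopyTagsFromSource="volume",
    )
    # One snapshot is returned per attached volume.
    return [snap["SnapshotId"] for snap in response["Snapshots"]]
```

A scheduler (cron, for example) could call this function every 8 to 12 hours, in line with the recommendation above.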

Now the log backups are stored in EFS and the full backups are stored as EBS snapshots in S3. Both storage locations are independent of any single AZ and can be accessed from every AZ in the region.

EC2 Auto Scaling

Next, we create an Auto Scaling group with a minimum and maximum of one instance. In case of an issue with the instance, the Auto Scaling group will create an alternative instance out of the same AMI as the original instance.
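As an illustration, the parameters for such a single-instance group might look like the sketch below. The helper name, group name, and subnet IDs are hypothetical. The keys are the ones accepted by the `create_auto_scaling_group` call of the boto3 Auto Scaling client.

```python
def single_instance_asg_params(name, launch_configuration, subnet_ids):
    """Build the arguments for an Auto Scaling group pinned to one instance.

    Listing subnets from several Availability Zones lets the group launch
    the replacement instance in another zone if one zone is unavailable.
    """
    if not subnet_ids:
        raise ValueError("at least one subnet is required")
    return {
        "AutoScalingGroupName": name,
        "LaunchConfigurationName": launch_configuration,
        "MinSize": 1,
        "MaxSize": 1,
        "DesiredCapacity": 1,
        "VPCZoneIdentifier": ",".join(subnet_ids),
        "HealthCheckType": "EC2",
    }
```

With boto3 installed and credentials configured, the result could be passed on as `boto3.client("autoscaling").create_auto_scaling_group(**params)`.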

We first create a golden AMI for the Auto Scaling group and the AMI is used in a launch configuration with the desired instance type. With a shell script in the user data, upon launch of the instance, new volumes are created out of the latest EBS snapshot and attached to the instance. We can use the EBS fast snapshot restore feature to reduce the initialization time of the newly created volumes.
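The restore logic in the user data could be sketched as follows. The original uses a shell script; this Python version only illustrates the same steps. It assumes `ec2` behaves like `boto3.client("ec2")`, that snapshots carry a hypothetical `hana-sid` tag, and that a single volume is restored (a real system would repeat this for each data volume). The volume type is also an assumption, and pagination of the snapshot list is ignored.

```python
def latest_completed_snapshot(snapshots):
    """Return the newest snapshot whose State is 'completed', or None."""
    completed = [s for s in snapshots if s.get("State") == "completed"]
    return max(completed, key=lambda s: s["StartTime"], default=None)


def restore_volume(ec2, instance_id, availability_zone, device, sid):
    """Create a volume from the latest snapshot and attach it to the instance."""
    found = ec2.describe_snapshots(
        OwnerIds=["self"],
        Filters=[{"Name": "tag:hana-sid", "Values": [sid]}],  # hypothetical tag
    )["Snapshots"]
    snapshot = latest_completed_snapshot(found)
    if snapshot is None:
        raise RuntimeError("no completed snapshot found")

    volume_id = ec2.create_volume(
        SnapshotId=snapshot["SnapshotId"],
        AvailabilityZone=availability_zone,  # zone of the new instance
        VolumeType="gp3",  # assumption; use the type of the original volume
    )["VolumeId"]

    # The volume must be in the 'available' state before it can be attached.
    ec2.get_waiter("volume_available").wait(VolumeIds=[volume_id])
    ec2.attach_volume(VolumeId=volume_id, InstanceId=instance_id, Device=device)
    return volume_id
```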

If the database were started at this point, it would be in a crash-consistent state. To restore it to the latest state, we can leverage the log backups stored in EFS, which is mounted automatically by the AMI. Additional care must be taken to ensure that the SAP application server is aware of the new IP address of the restored SAP HANA database server.

Benefits

- Better fault tolerance – Amazon EC2 Auto Scaling can detect when an instance is unhealthy, terminate it, and launch an instance to replace it. Amazon EC2 Auto Scaling can also be configured to use multiple Availability Zones. If one Availability Zone becomes unavailable, Amazon EC2 Auto Scaling can launch instances in another one to compensate.

- Better availability – Amazon EC2 Auto Scaling helps in ensuring the application always has the right capacity to handle the current traffic demand.

- Better cost management – Amazon EC2 Auto Scaling can dynamically increase and decrease capacity as needed. Since AWS bills EC2 instances on a pay-as-you-go basis, you can save money by launching instances only when they are needed and terminating them when they aren’t.

Conclusion

This blog discussed how Sify can help SAP customers save costs by automating the recovery process for the HANA database using native AWS tools. Sify would be glad to help your organization as your AWS Managed Services Partner with a tailor-made SAP on AWS Cloud solution that provides a seamless cloud migration experience and Cloud Infrastructure management thereafter.

Fast-track your SAP’s Cloud Adoption on AWS, with Sify

Cloud computing (later known simply as Cloud), one of the leading technology trends of the last few years, has become a game-changer. Cloud offers organizations a platform not just to host their applications but also to maintain and manage them, billed on a pay-per-usage model. This means replacing Capex (capital expenditure on hardware/servers) with a flexible Opex (operating expenses) model, which also helps organizations reduce their manpower budget.

The biggest advantage of Cloud is its scalability – freedom to add/reduce storage/compute/network bandwidth as per the need within an agreed SLA-framework.

The rise of Cloud adoption led to two categories:

- Cloud as a hosting platform

- Cloud-based software/applications

Cloud as a hosting platform

This is more popularly known as Platform-as-a-Service (PaaS) or Infrastructure-as-a-Service (IaaS) wherein the service provider offers bundled services of Compute, Storage, Network & Security requirements.

Cloud providers deliver a computing platform, typically including operating system, programming language execution environment, database, and web server. Application developers can develop and run their software solutions on a cloud platform without the cost and complexity of buying and managing the underlying hardware and software layers.

Based on the deployment options, Cloud is classified under Public, Private and Hybrid Cloud, which vary due to security considerations.

Why AWS

With some PaaS providers like AWS, the underlying compute and storage resources scale automatically to match application demand so that the cloud user does not have to allocate resources manually. They use a load balancer which distributes network or application traffic across a cluster of servers. Load balancing improves responsiveness and increases availability of applications.
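To make the idea of load balancing concrete, here is a deliberately simplified round-robin sketch. It is not how any AWS load balancer is implemented; managed load balancers also handle health checks, connection handling, and scaling. The class and server names are hypothetical.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Hand each incoming request to the next server in a fixed rotation."""

    def __init__(self, servers):
        servers = list(servers)
        if not servers:
            raise ValueError("at least one server is required")
        self._rotation = cycle(servers)

    def route(self, request):
        # The request content is ignored; only the arrival order matters.
        return next(self._rotation)


balancer = RoundRobinBalancer(["app-1", "app-2", "app-3"])
assignments = [balancer.route(f"request-{i}") for i in range(5)]
```

Because requests are spread evenly, no single server becomes a bottleneck, which is the responsiveness and availability benefit described above.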

As per a 2020 IDC study, over 85% of customers report cost reduction by running SAP on AWS.

Get consulting support, training, and services credits to migrate eligible SAP workloads with the AWS Migration Acceleration Program (MAP).

AWS has been running SAP workloads since 2008, meaningfully longer than any other cloud provider. AWS offers the broadest selection of SAP-certified, cloud-native instance types to give SAP customers the flexibility to support their unique and changing needs.

Whether you choose to lift and shift existing investments to reduce costs, modernize business processes with native AWS services, or transform on S/4HANA, reimagining is possible with SAP on AWS.

When you choose AWS, you will be joining 5,000+ customers who trust the experience, technology, and partner community of AWS to migrate, modernize, and transform their SAP landscapes.

AWS & Sify collaboration

While AWS has been a global leader in Public Cloud services, in India Sify Technologies (one of the pioneers in the ICT domain and India’s first SSAE-16 Cloud certified provider) designed its own Cloud, ‘CloudInfinit’, back in 2013. Since then, Sify has been helping diverse organizations move their ERP applications, especially SAP workloads, to its Cloud seamlessly.

With Sify’s cloud@core strategy, customers can enjoy all facets of Cloud with complete visibility and control.

Sify’s SAP deployment flexibility on Cloud

SAP on AWS hybrid cloud solutions

Use Cases

- Lifecycle Distribution: Separate Development and Test Environments

- Workload Distribution

- High Availability Deployments

Business Benefits

- Best of breed performance with Hybrid Cloud configuration

- Efficient integrations with extended ecosystems

- Compliance with data sovereignty

- Business continuity with resilience

- Simplicity with self service

- Operational efficiency with reduced IT administration

- Cost reduction with on-demand bursting to public clouds

Migration methodology

For SAP migrations to AWS from on-premises or from any other Cloud, we use various methods to ensure a seamless migration, depending on parameters such as production database size, available downtime window, source/target operating system and database, and connectivity/link speed. These methods are briefly explained below.

SAP systems monitoring

Once SAP workloads are moved to AWS, Sify’s Managed Services takes care of every aspect, including monitoring the Operating System, Database, Connectivity, Security, Backup, etc., as per the agreed SLAs. It also monitors the mission-critical SAP systems closely, as depicted in the diagram below.

Conclusion

Cloud, a scalable, flexible, and yet robust hosting platform, allows you to run your ERP workloads as well as SaaS applications effectively. Going by the increasing number of customers moving to Cloud, it is certain that Cloud is not vaporware; it is here to stay and will only grow bigger and safer by the day. This also makes it necessary for organizations to chart out a Smart Cloud strategy.

Embrace the Hybrid Cloud with VMC on AWS

According to Gartner, most midsize and enterprise customers will be adopting a hybrid or multi-cloud strategy. Companies have already realized that in order to move to public cloud, it is important to structure workloads in a hybrid cloud model.

Companies must understand that hybrid IT is not an easy methodology. They must therefore organize their existing IT resources and application workloads efficiently, both on-premises and in public cloud, to accomplish the goals of the hybrid model. Additionally, they must look for the right skills for different private/public clouds and for the different sets of tools from the respective cloud providers.

At Sify, we define guidelines and a structure to fit your workloads to the right place in the Hybrid Cloud. Please read on to learn how Sify can help customers identify the right cloud (from the set of appropriate private and/or public clouds) for different sets of workloads.

Unique Challenges in Hybrid Cloud

In hybrid cloud, there are some unique challenges that businesses need to address first. The key challenges to address while designing a solution on Hybrid Cloud are listed here.

- Generally, it is very difficult to identify the most suitable cloud environment and manage the cost of different clouds.

- Though all cloud environments are powerful in providing features like scalability and elasticity, managing a hybrid cloud is quite a complex task.

- Security Risks: The threat of data breach or data loss is perhaps the biggest challenge faced during hybrid cloud adoption. Generally, companies prefer on-premises private cloud to protect data locally. However, in order to ensure top-tier security of data and applications on hybrid cloud, companies must draft robust data protection policies and procedures.

Identify Applications for Hybrid Cloud

It is very important to identify applications for hybrid cloud, and your decision must be based on each application’s architecture, behavior, and user accessibility. Below are some key points to remember while designing a Hybrid Cloud strategy:

- Consider the compatibility of applications before deciding whether you want to continue running the applications in an on-prem cloud environment or migrate them to Public Cloud.

- Identify the running cost/budget for an application and compare it with different cloud providers to choose the most suitable cloud platform that suits your budgetary outlines and business requirements.

- Pay due attention to licensing requirements before migrating to Public/Private Cloud because a minor loophole in this aspect can have a major impact on the overall business.

- Reassess the experience of the existing IT team in managing different Private/Public clouds seamlessly.

Why VMC on AWS as Hybrid Cloud Choice?

VMware Cloud (also called VMC) on AWS brings VMware’s enterprise-class Software-Defined Data Centre software to the AWS Cloud and enables customers to run production applications across VMware vSphere-based private, public, and hybrid cloud environments, with optimized access to AWS services.

AWS is VMware’s preferred public cloud partner for all vSphere-based workloads. The VMware and AWS partnership delivers a faster, easier, and cost-effective path to the hybrid cloud while allowing customers to modernize applications enabling faster time-to-market and increased innovation.

New Amazon EC2 i3en.metal instances for VMware Cloud on AWS, powered by Intel Xeon Scalable processors, deliver high networking throughput and lower latency so you can migrate data centres to the cloud for rapid data centre evacuation, disaster recovery, and application modernization.

VMC on AWS can be the right choice because VMware has been a trusted virtualization platform in the industry for many years, and it ensures that all your applications run seamlessly. Many companies already run their applications on the VMware platform. Below are some key identifiers for VMC on AWS:

- VMware SDDC runs on bare metal and is delivered, operated, and supported by VMware

- On-demand scalability and flexible consumption

- Full operational consistency with on-premises SDDC

- Seamless workload portability and hybrid operations

- Global AWS footprint, reach, and availability

- Native AWS services accessibility

How VMC on AWS Solution Works?

VMware Cloud on AWS infrastructure runs on dedicated, single-tenant hosts provided by AWS in a single account. Each host is equivalent to an Amazon EC2 i3.metal instance (2 sockets with 18 cores per socket, 512 GiB RAM, and 15.2 TB raw SSD storage). Each host is capable of running many VMware Virtual Machines (tens to hundreds, depending on their compute, memory, and storage requirements). Clusters can range from a minimum of 3 hosts to a maximum of 16 hosts per cluster. A single VMware vCenter server is deployed per SDDC environment.
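The host figures above allow a rough first-pass estimate of cluster size. The sketch below uses only the raw per-host numbers from this section; it ignores vSAN overhead, CPU overcommit, and failover headroom, so treat the result as a lower bound. The function name is hypothetical.

```python
import math

# Raw per-host figures quoted above for an i3.metal-equivalent host.
CORES_PER_HOST = 2 * 18
RAM_GIB_PER_HOST = 512
RAW_STORAGE_TB_PER_HOST = 15.2
MIN_HOSTS, MAX_HOSTS = 3, 16


def hosts_needed(cores, ram_gib, raw_storage_tb):
    """Estimate the hosts one cluster needs for the given raw requirements."""
    by_resource = max(
        math.ceil(cores / CORES_PER_HOST),
        math.ceil(ram_gib / RAM_GIB_PER_HOST),
        math.ceil(raw_storage_tb / RAW_STORAGE_TB_PER_HOST),
    )
    hosts = max(MIN_HOSTS, by_resource)  # a cluster has at least 3 hosts
    if hosts > MAX_HOSTS:
        raise ValueError("requirement exceeds a single 16-host cluster")
    return hosts
```

For example, a workload needing 200 cores, 4,096 GiB of RAM, and 60 TB of raw storage is memory-bound and would need at least 8 hosts by this estimate.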

How do We Access AWS Services?

Companies that use VMware Cloud on AWS (VMC) as the production environment for their business-critical applications require connectivity to an AWS account. This is enabled by an AWS elastic network interface, which provides 25 Gbps connectivity between VMC and AWS. Applications deployed on VMC can leverage native AWS services for storage, EC2 instances, RDS databases, load balancing, DNS routing, etc., providing customers with the best of both worlds. The native services that can be accessed from applications deployed on VMC include:

- Simple Storage Service (S3)

- Elastic File System (EFS)

- Amazon Relational Database Service (RDS)

Sify’s Value Propositions for Customers Looking for Hybrid Cloud Solutions

Sify has been in the Cloud industry since 2012 and has its own cloud for enterprise customers, which can be tailored to their requirements. Over the last few years, Sify has worked with large enterprise customers on requirement analysis, solution design, and implementation across Private, Public, and Hybrid Clouds.

Sify has dedicated, experienced, and certified SMEs who are involved in the solution and implementation stages and who provide operations services, enabling businesses to accelerate their adoption of Hybrid Cloud.

Our approach is to identify potential use cases for Hybrid Cloud options for VMC on AWS and native AWS services during the initial assessment and design phase, and we also suggest the most suitable services that can help customers meet the strategic goals. These services include the following:

- Discovery Workshop

- Assessment

- Build and Migrate

Feel free to contact us for a solution tailored to your organization, with the required support across the various stages of VMC and AWS setup and workload migration.

Leveraging CloudEndure in the migration to AWS Cloud

With growing demand for scalable and flexible infrastructure that lets organizations ramp up and speed their go-to-market, it has become necessary to adopt the Public Cloud, which can meet this challenge efficiently. Businesses are therefore looking for simple, reliable, and rapid migration of on-premises workloads to Public Cloud with minimal disruption. Sify, being an AWS Advanced Partner, has successfully carried out many large cloud migrations for several customers using CloudEndure and the best practices it brings.

CloudEndure is a SaaS offering from AWS for migrating workloads from any source (physical, virtual, or private/public cloud) to AWS, from one AWS Region to another within the same account, and across different AWS accounts. It uses block-level continuous replication to replicate data to the target AWS environment.

To replicate data from a source machine to a target machine, we need to install the agent on the source machine and have the required CloudEndure migration license. The migration license has an expiry date, after which data replication stops.

The Continuous Data Replication task is performed and pushed to the staging area (includes Replication Server, EBS Volume, S3 Storage, Subnets and IP). The CloudEndure Service Manager ensures the co-ordination among the Source Machine, Replication Server and the Target Server.

CloudEndure’s components have three points of contact with the external network:

- The CloudEndure Agent needs to communicate with the CloudEndure Service Manager.

- The CloudEndure Agent needs to communicate with the CloudEndure Replication Servers.

- CloudEndure Replication Servers need to communicate with the CloudEndure Service Manager and S3.

CloudEndure helps you overcome the following migration challenges effectively:

- Diverse infrastructure and OS type

- Legacy application

- Complex database

- Busy, continuously changing workloads

- Machine compatibility issues

- Expensive cloud skills required

- Downtime and performance disruptions

- Tight project timelines and limited budget

Best Migration Practices that CloudEndure recommends:

Planning

- Begin by mapping out a migration strategy that identifies clear business motives and use cases for moving to the cloud.

- Migrate in phases/waves or conduct a pilot light migration in which you start with the least business-critical workloads.

- Do not perform any reboots on the source machines prior to a cutover.

- When scheduling your cutover, ensure that you allow enough time for data replication to complete and for all necessary testing to be carried out.
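When scheduling the cutover, a back-of-the-envelope estimate of the initial replication time can help. The sketch below is an illustration only: the function name is hypothetical, and the `efficiency` factor (the share of the link actually usable for replication) is an assumption to be replaced with a measured value. It also ignores data that changes on the source during replication.

```python
def initial_sync_hours(data_gb, link_mbps, efficiency=0.8):
    """Estimate hours needed to replicate `data_gb` over a `link_mbps` link.

    Uses decimal units: 1 GB = 10**9 bytes, 1 Mbps = 10**6 bits per second.
    """
    if data_gb < 0 or link_mbps <= 0 or not 0 < efficiency <= 1:
        raise ValueError("invalid input")
    bits_to_send = data_gb * 8 * 1000**3
    usable_bits_per_second = link_mbps * 1_000_000 * efficiency
    return bits_to_send / usable_bits_per_second / 3600
```

For instance, 1,000 GB over a fully usable 1,000 Mbps link takes 8,000 seconds, or roughly 2.2 hours; at the default 80% efficiency it takes about 2.8 hours. Testing time should be added on top of this.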

Licensing

- Ensure that you have sufficient migration licenses for your project.

- A CloudEndure Migration license is free for 90 days of use following agent installation on the source machine.

- While the use of CloudEndure Migration is free, you will incur charges for any AWS infrastructure that is provisioned during migration and after cutover, such as compute (EC2) and storage (EBS) resources.
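Since each license is valid for 90 days after agent installation, it can be useful to track how much of that window remains for each source machine. The sketch below shows the date arithmetic; the function names are hypothetical, and counting the installation day as day zero is an assumption.

```python
from datetime import date, timedelta

LICENSE_DAYS = 90  # free usage period following agent installation


def license_expiry(agent_installed_on):
    """Return the date on which the 90-day migration license runs out."""
    return agent_installed_on + timedelta(days=LICENSE_DAYS)


def days_remaining(agent_installed_on, today):
    """Days left before replication stops (negative once expired)."""
    return (license_expiry(agent_installed_on) - today).days
```

Comparing `days_remaining` with the planned cutover date makes it easier to spot machines whose license would expire before their migration wave.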

Testing

- Check and optimize the network needed for migration.

- Perform a test at least one week before you plan to migrate your source machines. This time frame is intended for identifying potential problems and solving them before the actual cutover takes place.

- Train the staff on CloudEndure early, and resolve any risks during the testing phase itself.

- Ensure connectivity to your target machines (using SSH for Linux or RDP for Windows) and perform acceptance tests for your application.

Successful Implementation

- Cut over the machines on the planned date.

- Carry out acceptance tests on the migrated application and its functionality.

- Remove source machines from the console after the cutover has been completed in order to clean up the staging area, reduce costs, and remove replication resources that are no longer needed.

Use cases

The most common use cases for CloudEndure Migration are:

- Lift and Shift, then optimize

- The vast majority of Windows/Linux servers, provided the agent can be installed on the source machine

- Replicating Block Storage devices like SAN, iSCSI, Physical, EBS, VMDK, VHD

- Replicating full machine/volume

Conclusion

Keep the above points in mind while migrating to AWS using CloudEndure to achieve the desired outcomes. Sify would be glad to help your organization as your AWS Managed Services Partner with a tailor-made solution that provides a seamless cloud migration experience and Cloud Infrastructure management thereafter.

Re-Architect your Network with Sify

Integrate your Cloud, Core, and Edge

With the advent of IoT, pervasive mobility, and growing cloud service adoption, the network has become increasingly distributed, and the need for faster compute and connectivity at the edge has become more pronounced.

As organizations increasingly drive digital transformation initiatives and adopt hybrid multi-cloud, the network must be rearchitected to support new-age topologies. Hence, Network Transformation gains predominance. Network Transformation and re-architecture must play out at all the 3 points of presence of the Network – at the Network Core, Network Management layer, and the Network Edge.

Let us look at the significance of the Transformation at these 3 layers and the contribution that Sify is bringing at each of these points of presence.

Transformation at the Network Core

With Cloud adoption, the Data Center is no longer the Core of the network. As enterprises move their applications to the cloud on consumption-based models for enhanced business productivity, it has become important to secure connectivity to the Cloud and extend Quality of Service to a hybrid multi-cloud environment. Hence, the transformation of the network at the Core is essential for improved agility, enhanced productivity, and scalability.

Sify’s Transformation Services at the Core are designed to help customers harness the true potential of cutting-edge cloud, digital, and network technologies. We help our customers accomplish their transformation goals and meet dynamic business requirements capably by providing Cloud Interconnect networks, which enable customers to establish direct connectivity between their private and public cloud infrastructure and fully realize the benefits of hybrid cloud architecture. With our Hyperscale Cloud partnerships and the Cloud Interconnect Networks, combined with expertise to deploy technologies like SDWAN & NFV, we hold a distinct level of expertise in network integration and transformation for a hybrid multi-cloud environment.

Transformation at the Network Management and Control Layer

As networks become more complex, organizations are leveraging multiple network service providers while their applications and end-users are increasingly distributed. This has resulted in a very complex network architecture, and monitoring and managing these distributed networks and network elements has become critical. Network Management & Control requires a distinct level of expertise, advanced tools, and automation platforms, so it makes good business sense to outsource the management to an expert who has the scale, experience, and toolsets to deliver it. The customer can in turn focus on their core business.

Empowered with a robust Network Operations Center, Sify offers a wide spectrum of Network Consolidation, Network Transformation, and Operations Outsourcing solutions. Sify can manage a customer’s as-is WAN network architecture, provide 24×7 monitoring of links, undertake incident management and change management, and ensure unified SLA management across multiple service providers through a single window. Sify also helps customers with network study and assessment, and with consulting for Network Re-Architecture and Managed Services.

Transformation at the Network Edge

Network Edge is the bridge between an organization and its end customers to deliver enhanced user experience and employee productivity. It is a strategic gateway to connect widely distributed organizations. The traditional networks cannot accommodate the new IoT devices, sensors, and plethora of Edge devices that are connected and managed at the Edge. Therefore, it is crucial to adopt transformation of the network at the edge to meet the requirements of the end devices and make them an integral part of the Enterprise Networks.

Sify Edge Connect Services include advisory services like an assessment of customer requirement with respect to coverage and performance. Further, we ensure end-to-end implementation of customer’s WiFi network, which includes configuring guest access and incorporating employee authentication & access. Our highly secure platform provides insight into user patterns and analytics such as the location of users, applications browsed, etc. Sify also provides deep visibility and control of the performance and usage of applications. In addition, Sify Edge Services include IoT services and aggregation and integration of the same with the Enterprise Network.

While rearchitecting your network, it is important to include aspects connecting the above three points of presence of users and applications. This will help you architect a network that delivers efficiency and is future-ready. However, the majority of organizations lack the necessary skills and expertise to envision and manage such a complex transformation, and that is where Sify Technologies can be your partner in this transformation journey.

What makes Sify the preferable partner for Network Transformation?

Sify is the largest Information and Communications Technology service provider in India, serving 10,000+ businesses across multiple industries with its Cloud and Data Center services, Network services, Security services, Digital Services, and Application Services. Our industry-wide experience of working with diverse clients, our skilled workforce for managing varied network transformation projects, and our Technology Partner ecosystem make us the most trusted Network Transformation partner for Indian enterprises.

Today, Network Integration, Network Transformation, and Managed Services are the key differentiators. That’s where Sify can help businesses achieve their strategic goals. As a strong, capable ICT transformation partner, Sify brings the integrated skillsets, processes, and tools required for a seamless, cost-effective transition. In addition, Sify’s engineering capabilities are best in class and help our customers design innovative solutions that are custom-built to suit their business needs.